Reining in fully autonomous ‘killer robots’

The “Terminator” film made famous the words “I’ll be back” – and now self-directed robot warriors are on the way.

A science-fiction-like arms race is quietly underway around the globe, driven by the development of robots with the autonomy to decide who lives and who dies in future wars.

Fully autonomous machines could be deployed on battlefields within decades, and debate over the moral, ethical and legal consequences of unleashing “killer robots” is heating up around the world.

Military planners envision artificially intelligent machines that could win future wars by deciding who is an enemy to be terminated, while sparing human soldiers from combat at a far lower cost.

Robots do not feel fear, and ones that can think autonomously will continue to fight, even if an enemy severs command communications, proponents say.

“Advances in AI [artificial intelligence] will enable systems to make combat decisions and act within legal and policy constraints without necessarily requiring human input,” predicted a 2009 US Air Force report.

Little public debate has taken place so far about the consequences of robotic war-fighting technology capable of making “kill decisions”. Opponents of deploying such machines are raising the issue at the House of Commons in the United Kingdom this month, demanding an international treaty banning such weaponry.

However, some military planners and scientists say the technology’s rise is inevitable, and that instead of banning it, reasoned debate on the necessary safeguards must be held in order to safely guide lethal robot warriors into reality.

The future is now

So-called killer robots, such as drones and robotic sentry systems, are already widely in use. These weapons still rely on humans to decide whether or not to fire on a target. Fully autonomous weapons envisioned in the future, however, could function without human intervention.

Israel has already deployed the first autonomous weapon that pre-emptively attacks without human orders. The hovering drone called Harpy is programmed to recognise and automatically dive-bomb any radar signal that is not in its database of “friendlies”, reports New York Times columnist Bill Keller.

Israel also boasts the “Iron Dome” anti-missile system, which automatically intercepted hundreds of rockets fired from Gaza during the November 2012 Israeli-Palestinian conflict.

|

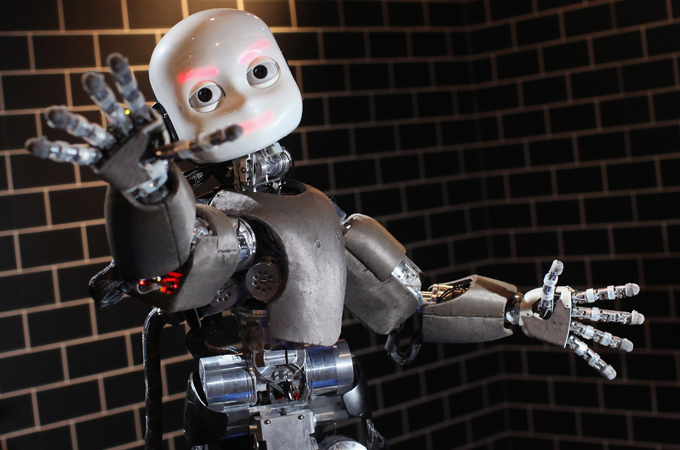

| An iCub robot built by the Italian Institute of Technology [Getty Images] |

Autonomous cars have been patrolling Israeli borders for years, said Hugo Guterman from Ben-Gurion University of the Negev, who helped design the “Tomcar”. It uses cameras, lasers and radar and “decides” what to do when surprises arise, he said.

Asked whether the self-directed vehicles are armed, Guterman replied: “No comment.”

The United States, United Kingdom, China, Russia, Germany, and South Korea are also busy developing autonomous robotic weapons.

“Recently the military verbiage has shifted from humans remaining ‘in the loop’ regarding kill decisions, to ‘on the loop’,” wrote retired US Major General Robert Latiff and author Patrick McCloskey.

“The next technological steps will put soldiers ‘out of the loop,’ since the human mind cannot function rapidly enough to process the data streams that computers digest instantaneously.”

Saving soldiers and civilians

Activists from the “Stop the Killer Robots” campaign, which opposes autonomous weapons, will converge on London on April 23 to call for a pre-emptive international ban.

“Killer robots” will never possess the human judgment that limits civilian casualties during war, they say, and such machines could never be held accountable for war crimes.

Others argue, however, that the possibility of designing robot soldiers that reduce battlefield deaths, destruction, and atrocities should not be ruled out in advance. The potential exists to create machines that won’t commit war crimes, such as summary executions and rape, in the heat of battle, they say.

Matthew Waxman – a law professor at Columbia University and fellow at the Council on Foreign Relations – told Al Jazeera that people should not rule out the possibility of “positive technological outcomes” for self-directed robot fighters.

“The development and deployment of increasingly autonomous weapon systems is inevitable, and any attempt at a global ban will likely be ineffective,” Waxman said.

“Autonomous weapon systems are not inherently unlawful or unethical. Those judgments depend on how well they work, including the possibility that they reduce risk not only to soldiers but to civilians, too.”

Sony’s autonomous dog robot called ‘Aibo’ [Getty Images]

Professor Ronald Arkin from the Georgia Institute of Technology said a moratorium on self-directed weapons would be a better course of action than an absolute ban. He also pointed out the potential benefits of robotic warriors.

“It may be possible that these lethal autonomous systems could ultimately reduce noncombatant casualties in warfare over conventional human forces,” Arkin told Al Jazeera. “And if that could be achieved, I would contend there is a moral imperative to use them, as this could lead to the saving of innocent human life, much like the use of precision-guided munitions.”

‘Slippery slope’

The issue is contentious enough that the US Department of Defense issued a November 2012 directive outlining steps to ensure autonomous weapons “function as anticipated”, and “minimise failures that could lead to unintended engagements, or to loss of control of the system”.

Some observers warn the move towards autonomy in weapons could spiral out of control.

“The robotisation of warfare is a slippery slope – the endpoint of which can neither be predicted nor fully controlled,” wrote Professor Wendell Wallach from Yale University’s Interdisciplinary Center for Bioethics.

“Even if one trusts that the Department of Defense will establish robust command and control in deploying autonomous weaponry, there is no basis for assuming that other countries and non-state actors will do the same,” he added.

While human combatants often do horrific things to each other, many also exhibit battlefield compassion – something machines will never be able to mimic, some robot experts say.

“What if the potential target is already wounded, or trying to surrender?” asked Keller in his op-ed titled “Smart Drones”.

Can robots eventually be programmed to distinguish enemies from innocents, or to judge proportionality in war?

Noel Sharkey, a professor of artificial intelligence and robotics at the UK’s Sheffield University, told Al Jazeera it is unlikely robot soldiers could possess the “sixth sense” that humans employ in combat situations. He said the idea that autonomous machines would perform more accurately and humanely on the battlefield was “hopeware, not software”.

“It’s about human judgment in the application of lethal force,” said Sharkey. “I can’t see any way that a computer system can apply to the laws of war.”

‘Existential threat’?

While fears of self-directed robots wreaking havoc on the battlefield have been raised, others have sounded the alarm about the more far-reaching consequences of machines beginning to think for themselves.

What if the artificial intelligence of robots eventually becomes sophisticated enough to challenge their human masters?

While it’s the theme of many Hollywood science fiction films, the question is taken seriously enough that the Cambridge University-based Centre for the Study of Existential Risk (CSER) is delving into it.

Huw Price, a Bertrand Russell professor of philosophy, is a CSER co-founder. Price said that while it may be viewed as “a little flaky” to discuss whether robots could one day conquer humanity, the rapid rise of artificial intelligence makes it an issue worthy of consideration.

“What we’re trying to do is to push it in the direction of scientific respectability,” Price said of CSER’s work on artificial intelligence. “It is important to understand the range of possible intelligences that might arise in AI, especially any kind of runaway AI. Dismissing the concerns is dangerous.”

Price said that while self-thinking robots may not threaten humans as portrayed in the “Terminator” movies, they could begin to direct resources towards their own goals at the expense of human concerns such as environmental sustainability, thus threatening our existence.

German soldiers walk past a ‘Cheatah VTE 3600’ [Getty Images]

“It is probably a mistake to think that any artificial intelligence, particularly one that just arose accidentally, would be anything like us and would share our values,” Price said in an email.

“Humans are nearing one of the most significant moments in our entire history: the point at which intelligence escapes the constraints of biology. And, I see no compelling grounds for confidence that if that does happen, we will survive the transition in reasonable shape.”

Professor Sharkey is more sceptical about robots getting out of control, however. “There is no evidence in current AI research [that it is possible]. Predictions about human-level intelligence has always been 20 or 30 years away since the 1950s. If it is at all possible, I think that it could be a very, very long time away.”

Professor Arkin also discounted the threat from artificial intelligence.

“This is a red herring – a classic Hollywood ‘Terminator’ vision – which is so far removed from near- to mid-term reality. It is not a concern anywhere in the near future in my opinion. It is simply a fear tactic used by proponents of a [autonomous weapons] ban.”